Table of Contents:

– Background

– Testing Methodology: Adjustments, Reticle Size, Reticle Cant

– Testing Methodology: Comparative Optical Evaluation

Background

At some point in the decade or so of optics testing I have now done, I began to notice that there were aspects of optical performance I had not specifically tested in one review but had tested and mentioned in others. This usually took the form of having no notes on something like the chromatic aberration (CA) of scope A when I had mentioned it for scope B. The reason for this might be, and probably was, that A didn’t have much of an issue with CA. Still, I realized a standard operating procedure would improve the quality, impartiality, and probably also the speed of my work. I began to come up with checklists of tests and evaluation points to use while evaluating optics. Over time I have added to and refined these procedures, and I will continue to do so. Furthermore, I realized that others might be interested in my testing methodology and that any good student of the sciences should provide such information as a matter of fidelity to the field. I did study this at university, learned how to do it, and personally value these practices on a deep philosophical level. It was a bit slow of me not to have done things in this systematized way from day 1. Be that as it may, this is the testing methodology I followed in 2015-2018.

Testing Methodology: Adjustments, Reticle Size, Reticle Cant

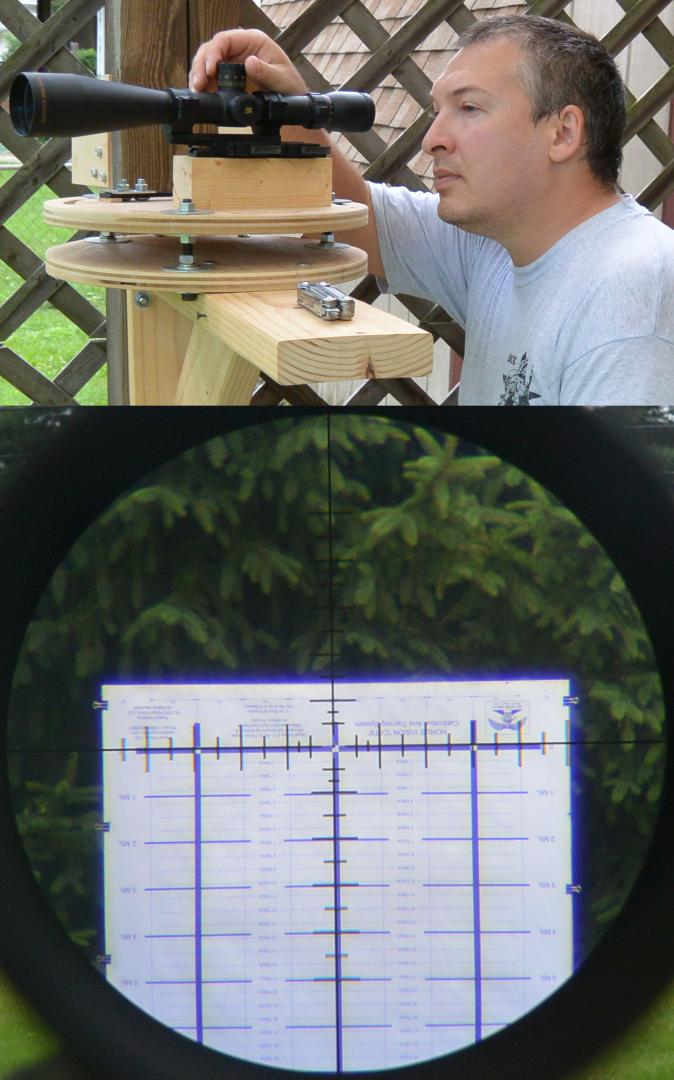

When testing scope adjustments, I first use the adjustable V-block on the right of the test rig to center the erector. About 0.2 mil of deviation from center is allowed, because the adjustable V-block has some play in it and it is difficult to do better than this. The erector can be centered with the scope mounted or unmounted; if it started unmounted, I mount it after centering. Next, I set the zero stop (on scopes with such a feature) to this centered erector and attach the optic to the rail on the left side of the test rig.

The three fine-threaded 7/16″ bolts on the rig allow the scope to be aimed precisely at an 8′ x 3′ Horus CATS 280F target placed 100 yards downrange, as measured with a quality fiberglass tape measure. The target is also trued to vertical with a bubble level. The scope is aimed so that the reticle’s vertical centerline is perfectly aligned with the centerline of the target and the reticle is vertically centered on the 0 mil elevation line.
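
Measuring the distance carefully matters because the target’s graduations are only correct angular units at the distance they were printed for. The sketch below illustrates that relationship, assuming a perfect scope, a target square to the line of sight, and the usual small-angle convention that 1 mil subtends 1/1000 of the range. The function names are illustrative only.

```python
import math

INCHES_PER_YARD = 36.0


def mil_in_inches(distance_yd: float) -> float:
    """Size of 1 mil at the given distance, using the small-angle convention
    that 1 mil subtends 1/1000 of the range (3.6 in at 100 yd)."""
    return distance_yd * INCHES_PER_YARD / 1000.0


def true_moa_in_inches(distance_yd: float) -> float:
    """Size of 1 true MOA (1/60 of a degree) at the given distance
    (about 1.047 in at 100 yd)."""
    return distance_yd * INCHES_PER_YARD * math.tan(math.radians(1.0 / 60.0))


def apparent_tracking_error_pct(calibrated_yd: float, actual_yd: float) -> float:
    """Apparent tracking error (percent) of a PERFECT scope when the target
    stands at actual_yd but its graduations were drawn for calibrated_yd.
    A farther target makes each graduation subtend a smaller angle, so the
    reticle crosses more of them and the clicks look too large."""
    return (actual_yd / calibrated_yd - 1.0) * 100.0


# Hypothetical example: a target set 1 yard too far away.
print(round(mil_in_inches(100), 3))                     # 3.6
print(round(true_moa_in_inches(100), 3))                # 1.047
print(round(apparent_tracking_error_pct(100, 101), 2))  # 1.0
```

In other words, a 1 yard error in target placement alone would show up as roughly a 1% tracking error, which is an order of magnitude larger than the typical deviations discussed below.
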

The CATS target is graduated in both mils and true MOA and is calibrated for 100 yards. Because the target was designed to be fired at rather than used in conjunction with a stationary scope, it is mounted upside-down on a target backer designed specifically for this purpose. (Since “up” for bullet impact means “down” for reticle movement on the target, the inversion is necessary.) With the three bolts tightened on the test rig head, the rig deflects about 0.1 mil under the force required to move the adjustments, and it immediately returns to zero when the force is removed. It is a very solid, very precise test platform. Each click of adjustment moves the reticle on the target, and the tester can watch this as it actually happens during the test: it’s quite a lot of fun if you are a bit of a nerd like I am!

After properly setting the parallax to the target (head bob method) and then the diopter, I move the elevation adjustment through its range from erector center until it stops. Every 5 mils dialed, I note any deviation of the reticle’s position on the target from where it should be. I also note the total travel and any excess travel in the elevation knob after the reticle stops moving but before the knob stops. At the extent of this travel I can also determine the cant of the reticle by measuring how far off the target centerline the reticle has moved. I then reverse the process and dial back down to zero. This is done several times to verify consistency, noting any changes. After the elevation adjustments have been tested in this manner, the windage adjustments are tested out to 4 mils each way using the same target and essentially the same method.
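
The arithmetic behind those notes is simple enough to sketch. This assumes each checkpoint is recorded as the dialed value and the reticle position read off the target, both in mils, and that cant is taken from the sideways drift over the full vertical travel. The names and the sample readings are hypothetical, not results from any scope.

```python
import math
from typing import List, Tuple


def tracking_errors_pct(readings: List[Tuple[float, float]]) -> List[float]:
    """Percent tracking error at each checkpoint.

    readings: (dialed_mil, observed_mil) pairs, e.g. one every 5 mils.
    Positive means the reticle moved farther than dialed.
    """
    return [(observed - dialed) / dialed * 100.0
            for dialed, observed in readings if dialed != 0]


def cant_degrees(vertical_travel_mil: float, lateral_offset_mil: float) -> float:
    """Reticle cant implied by how far the reticle drifted off the target
    centerline over the full vertical travel."""
    return math.degrees(math.atan2(lateral_offset_mil, vertical_travel_mil))


# Hypothetical readings for illustration only.
readings = [(5.0, 5.0), (10.0, 10.05), (15.0, 15.1)]
print([round(e, 2) for e in tracking_errors_pct(readings)])  # [0.0, 0.5, 0.67]
print(round(cant_degrees(30.0, 0.05), 3))                    # 0.095
```

Expressing tracking error as a percentage at each checkpoint, rather than as a single end-of-travel number, is what makes it possible to see error that grows with distance from erector center.
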
The elevation and windage are then tested together by dialing a large box 8 mils wide and as tall as the adjustments will allow. If it is easy to do so on a given scope (i.e., it is not a pin-type zero stop model), I then re-align the test rig to point the scope at the bottom of the target and test the elevation in the other direction for tracking and range. After concluding the testing of adjustments, I also test the reticle size calibration. This is done quite easily on the same target by comparing the reticle markings to those on the target.
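
For the box and reticle checks, two small calculations cover what gets written down. The sketch below assumes the reticle span is read against the calibrated target graduations and that the box is scored by how far the reticle ends up from its starting point once the knobs are back at their starting values. The names and numbers are hypothetical.

```python
import math
from typing import Tuple


def reticle_size_error_pct(reticle_span_mil: float, target_span_mil: float) -> float:
    """Reticle subtension error. reticle_span_mil is what the reticle marks say
    (e.g. the 10 mil hash); target_span_mil is what that same span covers on the
    calibrated target. Positive means the reticle is too large."""
    return (target_span_mil - reticle_span_mil) / reticle_span_mil * 100.0


def box_closure_error_mil(start: Tuple[float, float], end: Tuple[float, float]) -> float:
    """Distance between where the reticle started and where it ended up after
    dialing around the box back to the starting click values."""
    return math.hypot(end[0] - start[0], end[1] - start[1])


# Hypothetical numbers for illustration only.
print(round(reticle_size_error_pct(10.0, 10.04), 2))              # 0.4
print(round(box_closure_error_mil((0.0, 0.0), (0.03, 0.04)), 3))  # 0.05
```
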

Results from testing a single scope of a given model from a given manufacturer, which is really all that is feasible, are not meant to be indicative of all scopes from that maker. Accuracy of adjustments, reticle size, and cant will differ from scope to scope. After testing a number of scopes, I have a few theories as to why. As designed on paper, I doubt that any decent scope has flaws resulting in inaccurate clicks in the center of the adjustment range. Similarly, I expect few scopes are designed with inaccurate reticle sizes (and I don’t even know how you would go about designing a canted reticle, as the reticle is etched on a round piece of glass and cant simply results from it being rotated incorrectly when positioned). However, ideal designs aside, during scope assembly the lenses are positioned by hand and will be off by this much or that much. This deviation in lens position from design spec can cause the reticle size or adjustment magnitude to be incorrect and, I believe, is the reason for these problems in most scopes. Every scope maker has a maximum deviation from spec that it considers acceptable, and I very much doubt they would be willing to tell you what this number is, or better yet, what the standard deviation is. The tighter the tolerance, the better from the standpoint of the buyer, but also the longer the average assembly time and, therefore, the higher the cost. Assembly time is a major cost in scope manufacture; it is actually the reason that those S&B 1-8x Short Dots took years to make it to market. Tolerances are a particular concern for scopes with high magnification ratios and for those that are short in length, as both of these design attributes tend to make assembly very touchy. This should make you, the buyer, particularly careful to test purchased scopes that have these desirable attributes, as manufacturers will face greater pressure on these types to allow looser standards.
If you test your scope and find it lacking, I expect that you will not have too much difficulty in convincing a maker with a reputation for good customer service to remedy it: squeaky wheel gets the oil and all that. Remember that some deviations, say a scope’s adjustments being 1% too large or small, are easy to adjust for in ballistic software, whereas others, a large reticle cant for instance, are not.
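
The reason a percentage error is easy to correct is that it scales every dialed value by the same factor. How a given ballistic program exposes this correction varies, so the sketch below shows only the underlying arithmetic, assuming the factor comes from a tracking test like the one described above. The names are hypothetical. A cant error, by contrast, adds a sideways component that grows with the drop, which no single scale factor can remove.

```python
def tracking_factor(dialed_mil: float, observed_mil: float) -> float:
    """Actual reticle movement per indicated mil, from a tracking test
    (1.01 means the clicks are 1% too large)."""
    return observed_mil / dialed_mil


def corrected_dial(required_mil: float, factor: float) -> float:
    """Turret value to dial so the reticle actually moves required_mil."""
    return required_mil / factor


# Hypothetical scope whose clicks measured 1% large: 10.1 mil moved for 10 dialed.
factor = tracking_factor(10.0, 10.1)
print(round(factor, 3))                        # 1.01
print(round(corrected_dial(12.1, factor), 2))  # 11.98
```
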

Before I leave adjustments, reticle size, and reticle cant, I will give you some general trends I have noticed so far. On many models, adjustment deviation seems to vary with distance from optical center. This is a good endorsement for a 20 MOA base, as it will keep you closer to center for longer. The average elevation deviation seems to be about 0.1% at 10 mils. Reticle size deviation is sometimes found to track the adjustment deviation, so that the reticle and adjustments are off in the same direction and by a similar magnitude. This makes them agree with each other when it comes to follow-up shots. I expect this is caused by error in objective lens position affecting both in the same way. In scopes that have had a reticle with error, it has been of this variety, but fewer scopes have this issue than have adjustments that are off. Reticle size deviation does not appear to vary in magnitude as you move from erector center, although adjustment deviation often does. The mean reticle size error is less than 0.05%, and the mean reticle cant is about 0.05 degrees. Reticle cant, it should be noted, affects the shooter in proportion to the calculated drop, and the resulting error can easily get lost in the windage read. As an example, a 1 degree cant equates to about 21 cm at 1000 meters with a 168 gr .308 load that drops 12.1 mil at that distance. That is a lot of drop, yet a windage misread of 1 mph produces a substantially larger error (more than 34 cm) than our example cant-induced error. This type of calculation should be kept in mind when examining all mechanical and optical deviations in a given scope: a deviation is really only important if it is of a magnitude similar to the deviations expected to be introduced by the shooter, conditions, rifle, and ammunition. Lastly, the proliferation of “humbler” type testing units such as mine appears to have resulted in scope companies improving their QC standards. I see less deviation in products now than I did a few years ago.
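
The 21 cm figure follows from simple trigonometry: applying the drop along an axis tilted by the cant angle puts the shot sideways by drop × sin(cant). The sketch below reproduces the example above and also applies the 0.05 degree mean cant to the same drop. It ignores the tiny vertical shortfall, drop × (1 - cos(cant)), which is negligible at these angles. The function names are illustrative.

```python
import math


def cant_lateral_error_mil(drop_mil: float, cant_deg: float) -> float:
    """Sideways error from applying drop_mil along a reticle/adjustment axis
    tilted by cant_deg: drop * sin(cant)."""
    return drop_mil * math.sin(math.radians(cant_deg))


def mil_to_cm(angle_mil: float, range_m: float) -> float:
    """Linear size of an angle in mils at range_m (1 mil = range/1000)."""
    return angle_mil * range_m / 1000.0 * 100.0


# The example from the text: 12.1 mil of drop, 1 degree of cant, 1000 m.
err_mil = cant_lateral_error_mil(12.1, 1.0)
print(round(err_mil, 3))                   # 0.211
print(round(mil_to_cm(err_mil, 1000), 1))  # 21.1
# The mean cant mentioned above (0.05 degrees) at the same drop and range:
print(round(mil_to_cm(cant_lateral_error_mil(12.1, 0.05), 1000), 1))  # 1.1
```

At the mean cant level, the error is about 1 cm at 1000 m, far below anything the windage call will introduce.
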

Testing Methodology: Comparative Optical Evaluation

The goal of my optical performance evaluation is NOT to attempt to establish some sort of objective ranking system. There are a number of reasons for this. Firstly, it is notoriously difficult to measure optics in an objective and quantifiable way. Tools such as MTF plots have been devised for that purpose, primarily by the photography business. Using such tools to measure rifle scopes is complicated by the fact that scopes have no image recording function, so a camera must be used in conjunction with the scope. Those who have taken through-the-scope pictures will understand the image-to-image variance in quality and the ridiculousness of attempting to judge the quality of the scope from images so obtained. Beyond the difficulty of applying objective and quantifiable tools from the photography industry to rifle scopes, additional difficulties are encountered in duplicating repeatable and meaningful test conditions. Rifle scopes are designed to be used primarily outside, in natural lighting, and over substantial distances. Natural lighting conditions are not amenable to repeat performances. This is especially true if you live in central Ohio, as I do. Without repeatable conditions, analysis tools have no value, as the conditions are a primary factor in the performance of the optic. Lastly, even if such meaningful data were gathered, its analysis would not be without additional difficulties. It is not immediately obvious which aspects of optical performance, such as resolution, color rendition, contrast, curvature of field, distortion, and chromatic aberration, should be considered of greater or lesser importance. For such analysis to have great value, not only would a ranking of optical aspects be in order, but a compelling and decisive formula would have to be devised to quantitatively weigh the relative merits of the different aspects.
Suffice it to say, I have neither the desire nor the resources to embark on such a multi-million dollar project and, further, I expect it would be a failure anyway since, in the end, no agreement would be reached on the relative weights of the different factors.
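
To make the weighting problem concrete, here is a toy sketch with entirely hypothetical scores for two hypothetical scopes. It is not a scoring system used in these reviews; it only shows that the same scores produce opposite rankings under two equally defensible weightings.

```python
from typing import Dict, List

# Entirely hypothetical 1-10 scores; not measurements of real scopes.
SCORES: Dict[str, Dict[str, float]] = {
    "Scope A": {"resolution": 9, "contrast": 6, "chromatic_aberration": 5},
    "Scope B": {"resolution": 7, "contrast": 8, "chromatic_aberration": 8},
}


def weighted_score(attrs: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of attribute scores."""
    total = sum(weights.values())
    return sum(weights[name] * value for name, value in attrs.items()) / total


def ranking(weights: Dict[str, float]) -> List[str]:
    """Scope names ordered best-first under the given weights."""
    return sorted(SCORES, key=lambda s: weighted_score(SCORES[s], weights), reverse=True)


resolution_first = {"resolution": 4, "contrast": 1, "chromatic_aberration": 1}
equal_weights = {"resolution": 1, "contrast": 1, "chromatic_aberration": 1}
print(ranking(resolution_first))  # ['Scope A', 'Scope B']
print(ranking(equal_weights))     # ['Scope B', 'Scope A']
```
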

The goal of my optical performance evaluation is instead to help the reader get a sense of the personality of a particular optic. Much of the testing documents the particular impressions each optic makes on the tester. An example of this might be a scope with a particularly poor eyebox, behind which the user just can’t seem to find a position where the whole image is clear. Likewise, a scope might jump out to the tester as having a very bad chromatic aberration problem that makes it difficult to see things clearly because everything is fringed with odd colors. Often these personality quirks mean more to the user’s experience than any resolution figure would. My testing seeks to document the experience of using a particular scope in such a way that the reader will form an impression similar to that of the tester with regard to like or dislike and will be aware of the reasons for that impression.

The central technique utilized for this testing is comparative observation. One of the test heads designed for my humbler apparatus consists of five V-blocks, four of which are adjustable. This allows each of the four scopes on the adjustable blocks to be aimed so that it is collinear with the fifth. For the majority of the testing, each scope is then set to the same power (as a rule, the highest power shared by all). Marked magnification is by no means exact, so this only approximates equal power. The tester individually adjusts the diopter of each scope, centers its adjustments optically, and sets its parallax. A variety of targets, including both natural backdrops and optical test targets, are observed through all of the optics, with the parallax adjusted for each optic at each target. A variety of lighting conditions over a variety of days are used. Specific notes are made regarding: resolution, color rendition, contrast, field of view, edge-to-edge quality, light transmission, pincushion and barrel distortion, chromatic aberration, tunneling, depth of field, eyebox, stray light handling, and optical flare. The observations from all of these sessions are combined in the way the tester believes best conveys his opinion of the optic’s performance and explains the reasons for it.
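
The checklist idea from the Background, making sure chromatic aberration gets a note for scope A even when it is a non-issue, can be sketched as a simple record per scope that reports which evaluation points still have no notes. This is a hypothetical illustration of the idea, not a tool used in these tests; the class and scope names are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The evaluation points listed above, in order.
CHECKLIST = (
    "resolution", "color rendition", "contrast", "field of view",
    "edge-to-edge quality", "light transmission",
    "pincushion and barrel distortion", "chromatic aberration", "tunneling",
    "depth of field", "eyebox", "stray light handling", "optical flare",
)


@dataclass
class ScopeNotes:
    """Observation notes for one scope across all comparison sessions."""
    model: str
    notes: Dict[str, List[str]] = field(default_factory=dict)

    def add(self, item: str, note: str) -> None:
        if item not in CHECKLIST:
            raise ValueError(f"not a checklist item: {item!r}")
        self.notes.setdefault(item, []).append(note)

    def missing(self) -> List[str]:
        """Checklist items with no notes yet for this scope."""
        return [item for item in CHECKLIST if item not in self.notes]


scope_a = ScopeNotes("Scope A")  # hypothetical scope
scope_a.add("chromatic aberration", "slight fringing on dark branches against sky")
print(len(scope_a.missing()))  # 12
```

Rejecting items that are not on the list keeps notes from drifting into ad hoc categories that cannot be compared across scopes.
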