Depends. The OP may go off his meds again, showing his ass and tender feelers at the same time. Creating the need for a delete to avoid looking like a total asshat...

Great feedback from “the hide” members

- Thread starter Sniper King 2020

Reason for keeping is the explanation posts from others. The other threads getting deleted lost some good. Acting like a retard on the internet should hurt some. Could they have been cleaned up? Probably good for a technical thread.

Depends. The OP may go off his meds again, showing his ass and tender feelers at the same time. Creating the need for a delete to avoid looking like a total asshat...

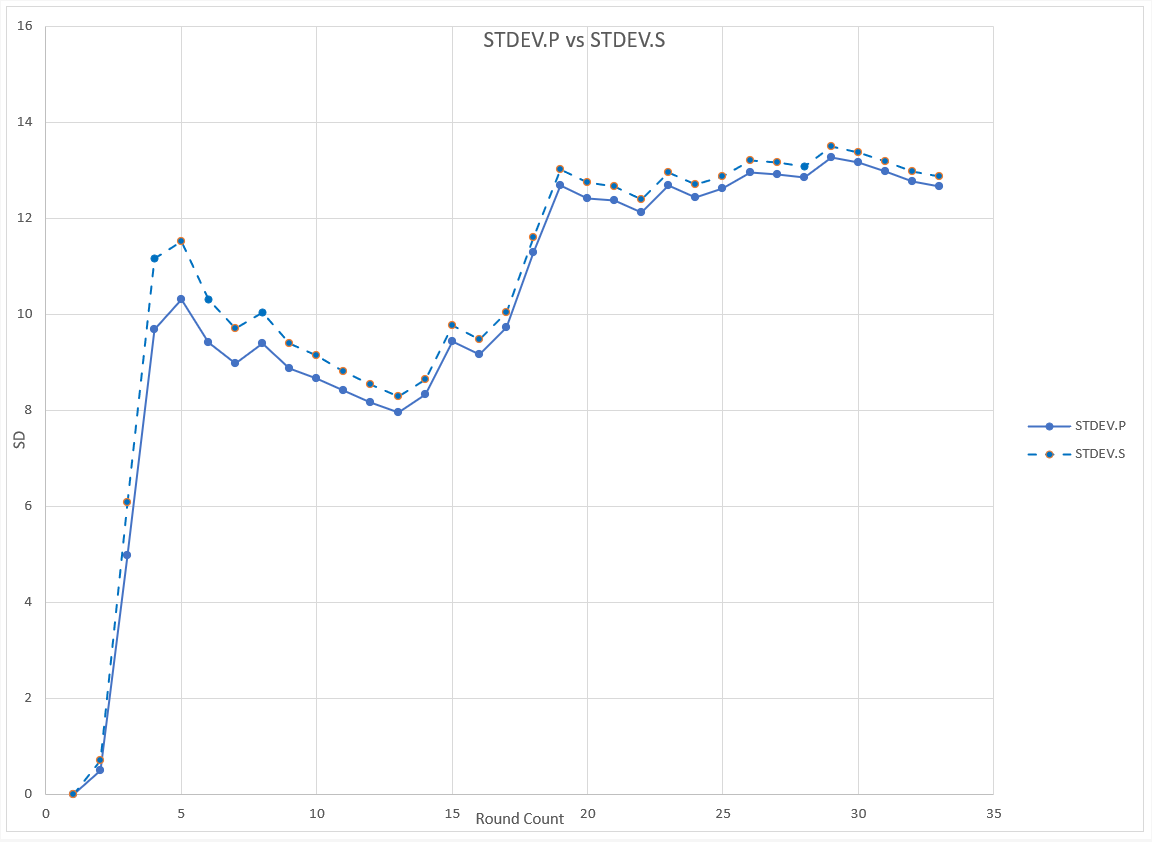

Pragmatically, it doesn't matter which one you use if you are 'sampling' enough shots to get a good idea of your actual SD. At least in my own experience, you need about 20 rounds, give or take, to get a good idea of your SD. The further below that you drop in terms of round count, the further into the noise you'll find yourself.
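A quick way to see why round count matters more than the formula: simulate strings from a load whose true SD you pick, and look at how widely the measured SD scatters at different shot counts. This is a sketch under stated assumptions (normally distributed velocities, a made-up true SD of 12 fps, an arbitrary seed), not anyone's real chronograph data:

```python
import numpy as np

rng = np.random.default_rng(seed=2)  # arbitrary seed, for repeatability
true_sd = 12.0   # made-up "true" SD in fps, for illustration only
trials = 20_000  # simulated strings per shot count

spread = {}
for n in (3, 5, 10, 20, 30):
    # each row is one simulated n-shot string from a normal distribution
    sims = rng.normal(loc=2800, scale=true_sd, size=(trials, n))
    sd = sims.std(axis=1, ddof=1)        # STDEV.S of every simulated string
    lo, hi = np.percentile(sd, [5, 95])  # middle 90% of the SDs you might measure
    spread[n] = hi - lo
    print(f"n = {n:2d}: measured SD usually lands between {lo:4.1f} and {hi:4.1f} fps")
```

Under these assumptions, a 3-shot SD can plausibly come out anywhere from about a quarter to about 1.7 times the true value, while by 20 shots it is within roughly 25-30% either way, and the band narrows only slowly after that.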

Here's a real life example with 33 rounds:

2628, 2627, 2638, 2611, 2612, 2623, 2617, 2635, 2625, 2631, 2630, 2620, 2620, 2637, 2645, 2630, 2610, 2599, 2596, 2617, 2611, 2626, 2600, 2625, 2638, 2602, 2609, 2610, 2598, 2629, 2615, 2621, 2629

STDEV.P = 12.665 fps

STDEV.S = 12.862 fps

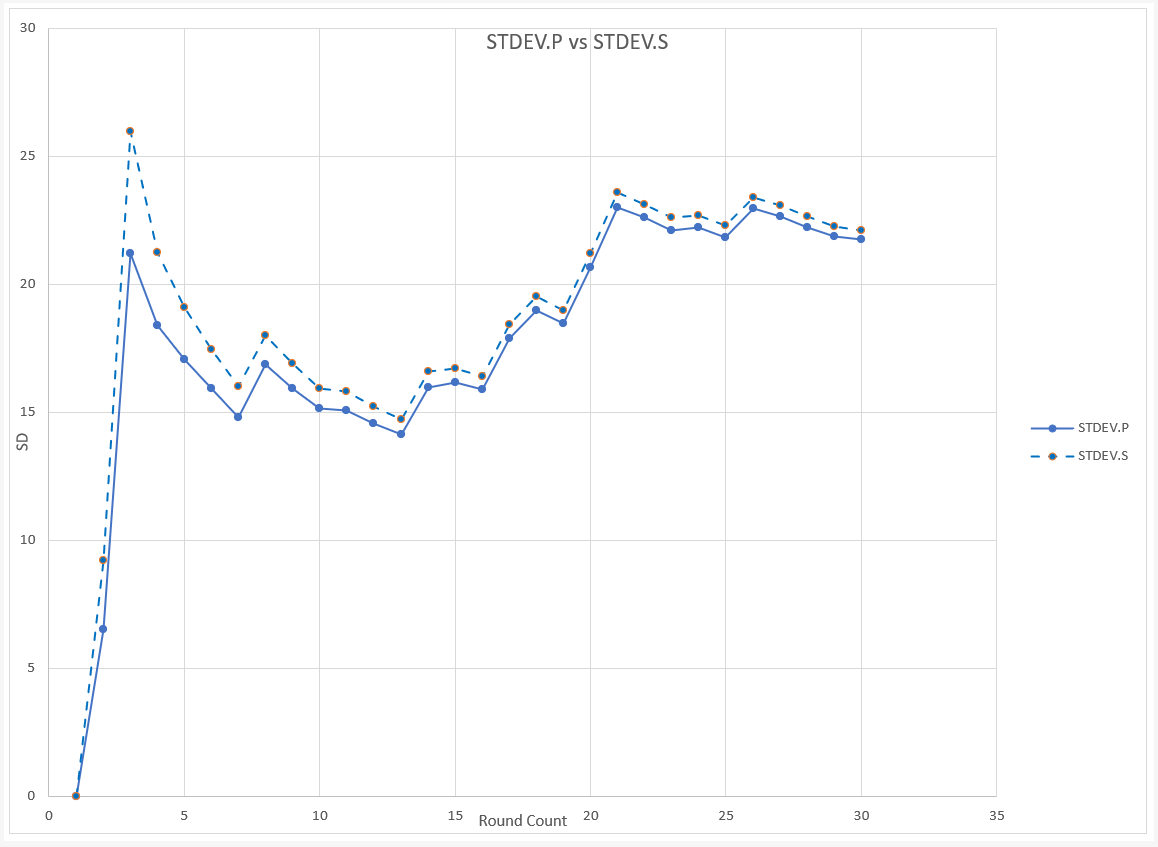

Here's another 30 rounds from a different rifle (different cartridge, different ammo, different day):

2889, 2876, 2839, 2865, 2856, 2856, 2860, 2834, 2855, 2860, 2874, 2868, 2868, 2894, 2883, 2854, 2903, 2900, 2869, 2915, 2923, 2862, 2870, 2848, 2881, 2915, 2886, 2877, 2881, 2892

STDEV.P = 21.732 fps

STDEV.S = 22.104 fps

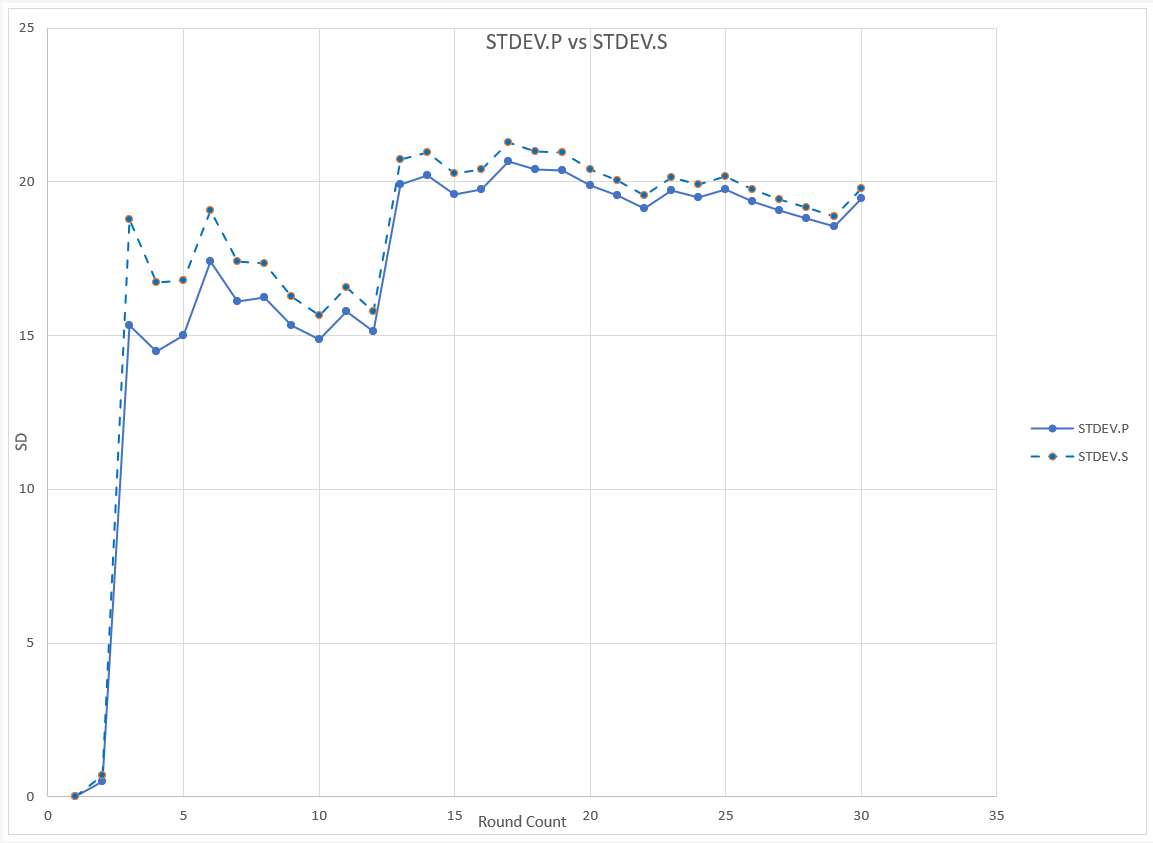

Here's a third example (different barrel/ammo from the 2 posted above):

2995, 2996, 3028, 2993, 3022, 2978, 3003, 2984, 3003, 2990, 2975, 2995, 3048, 3025, 3009, 3026, 3037, 3022, 3028, 3005, 2996, 3007, 3038, 3023, 3035, 3010, 3018, 3021, 3020, 3049

STDEV.P = 19.455 fps

STDEV.S = 19.788 fps

Once you get to roughly 20+ rounds, not only does the variation within each "method" become more stable, but the difference between the two "methods" becomes negligible from an application standpoint. It's really a non-issue unless you are one of those guys who thinks a 3-shot group is a meaningful test.
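If you'd rather check these numbers than take them on faith, here's a minimal sketch using Python's standard `statistics` module: `pstdev` divides by N like Excel's STDEV.P, and `stdev` divides by N-1 like STDEV.S. The velocities are the three strings posted above; the `strings` dictionary and the `sd_pair` helper are just illustrative names:

```python
import statistics

# Velocity strings (fps) from the three examples above
strings = {
    "rifle 1 (33 rds)": [2628, 2627, 2638, 2611, 2612, 2623, 2617, 2635, 2625, 2631,
                         2630, 2620, 2620, 2637, 2645, 2630, 2610, 2599, 2596, 2617,
                         2611, 2626, 2600, 2625, 2638, 2602, 2609, 2610, 2598, 2629,
                         2615, 2621, 2629],
    "rifle 2 (30 rds)": [2889, 2876, 2839, 2865, 2856, 2856, 2860, 2834, 2855, 2860,
                         2874, 2868, 2868, 2894, 2883, 2854, 2903, 2900, 2869, 2915,
                         2923, 2862, 2870, 2848, 2881, 2915, 2886, 2877, 2881, 2892],
    "rifle 3 (30 rds)": [2995, 2996, 3028, 2993, 3022, 2978, 3003, 2984, 3003, 2990,
                         2975, 2995, 3048, 3025, 3009, 3026, 3037, 3022, 3028, 3005,
                         2996, 3007, 3038, 3023, 3035, 3010, 3018, 3021, 3020, 3049],
}


def sd_pair(velocities):
    """Return (STDEV.P, STDEV.S) equivalents for a list of velocities."""
    return statistics.pstdev(velocities), statistics.stdev(velocities)


for name, v in strings.items():
    sd_p, sd_s = sd_pair(v)
    print(f"{name}: STDEV.P = {sd_p:.3f} fps, STDEV.S = {sd_s:.3f} fps")
```

Run as-is, it should print 12.665/12.862, 21.732/22.104 and 19.455/19.788 fps, matching the spreadsheet numbers above; in every case the two columns differ by only a few tenths of a fps.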

That was another point of contention. In practical terms, it really doesn't matter if you use N or N-1. As long as you don't mix the two, your data analysis will give you the same answer. And once you fire enough shots, the two SDs converge pretty closely.
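Why they converge: for any one string, STDEV.S is exactly STDEV.P times sqrt(N/(N-1)), a factor that depends only on the shot count. So if you compare loads at the same shot count, both formulas rank them the same way, and the factor itself shrinks toward 1 as N grows. A small sketch that prints that factor (the function name is made up for illustration):

```python
import math


def s_over_p(n):
    """Ratio of STDEV.S to STDEV.P for the same string of n shots (n >= 2)."""
    return math.sqrt(n / (n - 1))


for n in (3, 5, 10, 20, 30, 33):
    print(f"n = {n:2d}: STDEV.S is {100 * (s_over_p(n) - 1):4.1f}% larger than STDEV.P")
```

That works out to roughly 22% at 3 shots and about 12% at 5, but under 3% by 20 shots.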

But he was adamant that everyone except Garmin was "wrong."

I think this is more like SPAM.

Have y'all not ever heard the phrase "don't feed the troll"?

I see you've met my students.....

I typically won’t debate anyone on here, as I of all people have built thousands of models just to have my assumptions disproven by the data. Bayes just isn’t as good as it was sold to be, and I’ll leave it at that.

I got out of data science because people were calling themselves “Data Scientists” because they knew how to:

import sklearn.abc as abc

Or because they knew how to use and access analytical databases.

Then they get on LinkedIn and talk about overcoming impostor syndrome.

No, it’s because they aren’t what they sold themselves to be and they know it. The entire community is full of frauds.

I had graduate classes with “Data Scientists” who admitted they did not know mathematics or statistics well. They should never have been given the title. An entire community built on APIs, copying and pasting scripts, and using LLMs to produce their code. It’s embarrassing.

Yes, I am on a break this semester because of surgery.

In reality I was burned out from the very thing you are talking about: privileged little bastards (graduate students) who can barely do a regression and definitely can't write a report. I'll admit I am a weirdo and get a giant boner when we start talking about Hessians, backpropagation, etc. I watch YouTube videos on math (current subject: Riemannian geometry metric tensors for general relativity) for recreation (and the entire Bear Pit goes: "That explains so much").

My own "data scientist" irritates the shit outta me, calling me up every day with his latest bug that he can't figure out (look at your data, moron), and I want to scream "READ THE FUCKING ERROR MESSAGE AND GOOGLE THAT SHIT", but I politely assist him. My postdoc is less irritating but is very much like you describe: cut and paste. Took her 6 months to do this experiment that I did in one afternoon. (She wanted a Transformer over my CNN because TRANSFORMERS!!! Oh look, the loss is 0.02 better, that was worth it, and it's not even statistically significant. Guess the old boy knows his shit after all.) Ok, swap out the models and go. FFS, what is the hold up?

Then the best part: I have a bitching new server that just came up mid-December (I had a PAIN getting it installed). I set them both up on brand spanking new dual GPU 24GB cards (I know, not that impressive, but coming from a 16GB single server, again, big stiffy). They still haven't logged in a month later. I stayed up all night the first night going WEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEE like a 16-year-old with a hooker and a Ferrari, abusing the shit outta that thing to "stress test it."

That's my story and I'm stickin to it.

I'd say we should get a beer and commiserate but I can't drink for a year as my stomach has gone missing.

Just waiting to find the right startup to cash out on myself. If you can't beat 'em, take the investors' money and run....

OP can nuke it anytime until it’s locked, IIRC.

Reason for keeping is the explanation posts from others. The other threads getting deleted lost some good. Acting like a retard on the internet should hurt some. Could they have been cleaned up? Probably good for a technical thread.

It’s nice knowing others are seeing the same issues. I thought I was going insane.

I see you've met my students.....

I remember when I built a CNN model on my 16GB laptop and even with small batches it wasn’t enough memory (even with resizing). What’s worse is you need a ton of images to even make a good model so we need even more memory. So, I feel your pain on the memory constraints.

Seems like a garden variety forum suicide post to me.

Bryan's behavior here, when compared to his YouTube channel videos, is bizarre. Is this cat posting here really Bryan? Is this confirmed?

I'm going to watch the Twilight Saga tonight after this. Wish me luck!

Seems like a garden variety forum suicide post to me.

Yes, you're right, it is indeed a conscious choice to be here. One of the things one learns to do in seven decades on the planet is how to discern and filter out the useful nuggets of information and advice while simultaneously ignoring the bullshit and the assholes that provide it.

I already know that you two are going to have a great discussion about how mean the forum is that you make a conscious choice to be on.

STDEV.S is the correct method. End of story.

The difference between the two (open Google, because statisticians don't use those terms): there is the standard deviation (STDEV.P), which statisticians call sigma, and then there is the ESTIMATED standard deviation (STDEV.S), which is known as 's'.

It's like how your measured values are "x" and the mean is "mu".

English (Latin) letters mean "measured" and Greek letters are "intrinsic," i.e., properties of the distribution.

Standard deviation is the width of a Normal or Gaussian distribution. The formula is:

View attachment 8323682

sigma is the std dev; mu (the u thingy) is the mean.
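The formula image doesn't come through in this copy of the thread. For reference, the standard Normal (Gaussian) density with mean mu and standard deviation sigma, which is presumably what the attachment shows, is:

$$
f(x \mid \mu, \sigma) = \frac{1}{\sigma\sqrt{2\pi}}\, \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)
$$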

It's a complicated formula, but just know that sigma controls the width and mu controls the location:

View attachment 8323683

That's pretty standard stuff, but that's pure THEORY (egads, a running gag in my own posts). When you MEASURE something, you are sampling from that theoretical distribution (you get points at random from a random distribution). Assuming your distribution is normal (not always safe), you can estimate the TRUE standard deviation by using the estimated standard deviation, s. The key here is that there is some unknown TRUE sigma, which we try to estimate by taking samples. The estimated and true values only begin to approach each other once the number of samples becomes very, very large.

So the TL;DR version: STDEV.S would be the statistician's choice to ESTIMATE the standard deviation of your velocity.
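Written out, the two spreadsheet functions differ only in the divisor. With x_1, ..., x_N your recorded velocities and \bar{x} their average:

$$
\text{STDEV.P} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i-\bar{x}\right)^2}
\qquad
\text{STDEV.S} = s = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i-\bar{x}\right)^2}
$$

The divide-by-N version is the right formula when your numbers are the entire population; applied to a sample of shots, it comes out slightly small.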

Example: I created a distribution with mean 2800 and sigma 10. I grabbed 5 samples at random, 10 times, and took both the STDEV.P ("True Method") and the STDEV.S ("Estimated") of each set. However, we KNOW it's 10 (I made it!). Here are the results:

"True Method"

[13.275930373733024, 8.342655830573312, 7.23926247655942, 5.156843761443447, 8.550960467769086, 13.76093532000941, 3.2590625589140196, 7.783767404854264, 3.911747500033366, 7.031416934586289]

Estimated:

[14.842941390110614, 9.327372775023447, 8.09374150227517, 5.765526599966628, 9.560264439422538, 15.385193404759432, 3.6437427123280806, 8.702516519150631, 4.373466660444734, 7.861363121939068]

With the estimated std dev, you get a value that is higher (instead of dividing by the number of samples, you divide by n-1 for math reasons; using the "true" method actually biases the estimate low, toward 0), so you could get lucky or you could get unlucky.
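One nuance worth adding, shown here as a sketch under the same made-up setup as the example (normal, mean 2800, sigma 10, 5 shots per string; the seed and trial count are arbitrary choices): dividing by n-1 makes the VARIANCE estimate unbiased, so its average lands on sigma² = 100, while divide-by-n averages about 80. Taking the square root brings back a small low bias, so s itself averages a bit under 10 (roughly 9.4 at 5 shots).

```python
import numpy as np

rng = np.random.default_rng(seed=1)  # arbitrary seed for repeatability
trials, shots, sigma = 100_000, 5, 10.0

# each row is one 5-shot string drawn from N(2800, 10)
x = rng.normal(loc=2800, scale=sigma, size=(trials, shots))

var_p = x.var(axis=1, ddof=0)  # divide by n   (STDEV.P squared)
var_s = x.var(axis=1, ddof=1)  # divide by n-1 (STDEV.S squared)

print(f"avg variance, divide by n  : {var_p.mean():6.1f}  (true sigma^2 = {sigma**2:.0f})")
print(f"avg variance, divide by n-1: {var_s.mean():6.1f}")
print(f"avg SD, divide by n  : {np.sqrt(var_p).mean():5.2f}")
print(f"avg SD, divide by n-1: {np.sqrt(var_s).mean():5.2f}  (true sigma = {sigma:.0f})")
```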

OR

Based on the following random data I "sampled":

array([2794.70526224, 2816.48889134, 2797.31856425, 2785.55297754, 2791.62315356])

I would publish an s of 11.67 with a 90% bootstrap interval (5th to 95th percentile) of (4.07, 14.80), meaning I am 90% sure the TRUE sigma lies in the interval 4.07 to 14.80.

You use confidence intervals: since I have to publish shit for the dreaded peer review, I would say my estimated std dev is 11.67 (4.07, 14.80).

So I read that as a sample size of 5 means dick either way. But "s" is the correct method.
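For comparison, the textbook interval for sigma from a normal sample uses the chi-square distribution instead of bootstrapping. That is a swapped-in technique, not what was done above, and it assumes the velocities are normally distributed. Here's a minimal stdlib-only sketch for the same 5-shot string; to avoid needing SciPy, the chi-square quantile is found by bisection on the closed-form CDF that exists when the degrees of freedom are even (df = n-1 = 4 here). The helper names are hypothetical:

```python
import math
import statistics


def chi2_cdf_even_df(x, df):
    """Chi-square CDF for even df only: 1 - exp(-x/2) * sum_{j < df/2} (x/2)^j / j!."""
    if df % 2 != 0:
        raise ValueError("this closed form needs an even df")
    half = x / 2.0
    tail = sum(half**j / math.factorial(j) for j in range(df // 2))
    return 1.0 - math.exp(-half) * tail


def chi2_quantile_even_df(q, df, lo=0.0, hi=1000.0):
    """Invert the CDF by bisection (the CDF increases with x)."""
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if chi2_cdf_even_df(mid, df) < q:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def sigma_ci(velocities, level=0.95):
    """Chi-square confidence interval for sigma, assuming normally distributed shots."""
    n = len(velocities)
    df = n - 1
    ss = df * statistics.variance(velocities)  # sum of squared deviations from the mean
    alpha = 1.0 - level
    lower = math.sqrt(ss / chi2_quantile_even_df(1.0 - alpha / 2, df))
    upper = math.sqrt(ss / chi2_quantile_even_df(alpha / 2, df))
    return lower, upper


shots = [2794.70526224, 2816.48889134, 2797.31856425, 2785.55297754, 2791.62315356]
s = statistics.stdev(shots)
lo, hi = sigma_ci(shots)
print(f"s = {s:.2f}, 95% CI for sigma: ({lo:.2f}, {hi:.2f})")
```

This comes out around (7.0, 33.5), much wider on the high side than the bootstrap interval. With only five shots, resampling can never produce a range wider than the five original shots, so the bootstrap tends to understate the upper end. Either way, it backs up the point: five shots pin down sigma poorly.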

Also statisticians don't use excel, they use R, which is a filthy language.

I teach data science/AI so I use python:

Go to Google Colab and reproduce my results. I probably made a mistake, but fuck it. That's how you think about this as a "statistician."

Spaces (indentation) are important in the code, btw. Due to RNG, your numbers may vary slightly from mine. I'm not getting into pseudo-random numbers with howler monkeys.

Google Colab: colab.research.google.com

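```python
import numpy as np
import matplotlib.pyplot as plt

p = []     # "True" method: divide by n (np.std default, like STDEV.P)
s = []     # "Estimated" method: divide by n-1 (like STDEV.S)
n = 10     # number of repeated 5-shot experiments
size = 5   # shots per experiment

for i in range(n):
    # draw `size` velocities from a normal distribution: mean 2800, sigma 10
    x = np.random.normal(loc=2800, scale=10.0, size=size)
    p.append(np.std(x))
    # sample standard deviation by hand (same as np.std(x, ddof=1))
    tmp = (x - x.mean())**2
    tmp = tmp.sum()
    tmp = 1/(size - 1) * tmp
    tmp = np.sqrt(tmp)
    s.append(tmp)

# plot both estimates for each experiment; the true sigma is 10
plt.scatter(np.linspace(0, 9, 10), p, label="True")
plt.scatter(np.linspace(0, 9, 10), s, label="Estimated")
plt.legend()
plt.show()

# bootstrap: resample the last 5-shot string (x) with replacement, 100 times
sigma = []
for i in range(100):
    tmp = np.random.choice(x, 5, replace=True)
    print(tmp)
    sigma.append(np.sqrt((((tmp - tmp.mean())**2).sum()) / 4))

# 95th and 5th percentiles of the bootstrap SDs
# (together a 90% interval; use 97.5 and 2.5 for a 95% interval)
print(np.percentile(sigma, 95))
print(np.percentile(sigma, 5))
# s (divide by n-1 = 4) of the last string x
print(np.sqrt((((x - x.mean())**2) / 4).sum()))
```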

Anyone else get a boner from this, or is it just me?

Just want to thank the hide members for their feedback to me. Very little has been meaningful and most has been comical: I hope y’all learn something from testing that incorporates statistical significance testing. https://youtube.com/@WitchDoctorPrecision?si=oNVYHOH0y6POeFj8

Anyone else find it hilarious that benchrest people hand out a trophy called "sniper king?" lol

I thought it was a joke at first... but nope... it's real.

Well, I vote for user name change to “Bryan the tard” of misfit toy island…..

Not directed at you…

Weird. It was a joke.

Good to see yall triggered and looks like yall figured out the SD thing

be sure to join the FB page dedicated to y’all

www.facebook.com

I forgot to mention that I am proud to live rent free in your minds

Good to see yall triggered and looks like yall figured out the SD thing

be sure to join the FB page dedicated to y’all

www.facebook.com

I forgot to mention that I am proud to live rent free in your minds

good stuff kids. Keep it up!

You are the latest in line of retards that span over twenty years. This might be your first time but it is nothing new for the Hide.I forgot to mention that I am proud to live rent free in your mindsgood stuff kids. Keep it up!

Don’t you high road me, I’m a low road guy.

I forgot to mention that I am proud to live rent free in your minds

good stuff kids. Keep it up!

And 6 posts today. He’s talking to himself again, and still not liking the answers I bet.

Private group, 1 member.

How can one even be a sniper with no proof whatsoever of bow kills, concrete work, Oakley ownership or Velcro Crocs?

Nothing snipery here unless math is performed while wearing a ghillie suit overseeing a convoy withdrawing from a village in Eritrea on a secret mission for a Wyoming Senator who squeals like a piggy.

Oh and.

Sirhr

Hold up, what am I missing out on

Velcro Crocs

Hold up, what am I missing out on

Polishing them. In SF ROTC. Before renting a lambo on Haiti and cornering the scope market. All classic sniper tactics. C’mon man… get with the program!

Am I the only one that fapped to this?

So, he is starting a thread and a special Facebook page. And he is living in our heads and we are triggered. Yep yep, that stands up to scrutiny.

I rent this one by the week.

Polishing them. In SF ROTC. Before renting a lambo on Haiti and cornering the scope market. All classic sniper tactics. C’mon man… get with the program!

Am I the only one that fapped to this?

I did....... n-1 times.

Good to see yall triggered and looks like yall figured out the SD thing

be sure to join the FB page dedicated to y’all

www.facebook.com

Oh yeah?

Chapter 16 Comparing Two Samples | An Introduction to Bayesian Reasoning and Methods (bookdown.org)

Also calling it now: I technically don't have a math degree--I have a physics degree (couple of em).

I only TEACH data science and statistics at a D1 school.

Well, I once stayed at a Holiday Inn Express.

Is that your brain or are you just happy to see me?

We need to start handing out the Veer award againWhere’s the cock fag award?

Turkeytugger must not have a FB profile. I’m sure those two could jerk each other off for hours in a group like that.

What’s the probability that Flips will show up again…including the standard deviation?

The extreme spread will be wider.

Don’t awaken the whale

What’s the probability that Flips will show up again…including the standard deviation?

I feel attacked.

Just want to thank the hide members for their feedback to me. Very little has been meaningful and most has been comical: I hope y’all learn something from testing that incorporates statistical significance testing. https://youtube.com/@WitchDoctorPrecision?si=oNVYHOH0y6POeFj8

Actual picture of Bender in the Bear Pit.